-

Why You Should Invest in Healthcare Cybersecurity It’s hard to imagine anything more cynical than holding a hospital to ransom, but that is exactly what’s happening with growing frequency. The healthcare sector is a popular target for cybercriminals.…

-

The Disconnect between AI Hype and ML Reality: Implications for Business Operations You might think that news of “major AI breakthroughs” would do nothing but help machine learning’s (ML) adoption. If only. Even before the latest splashes — most notably…

-

Data Disasters: 8 Infamous Analytics and AI Failures Insights from data and machine learning algorithms can be invaluable, but mistakes can cost you reputation, revenue, or even lives. These high-profile analytics and AI blunders illustrate what can go wrong.…

-

Chatbots and their Struggle with Negation Today’s language models are more sophisticated than ever, but they still struggle with the concept of negation. That’s unlikely to change anytime soon. Nora Kassner suspected her computer wasn’t as smart as people…

-

Helping Business Executives Understand Machine Learning For data science teams to succeed, business leaders need to understand the importance of MLops, modelops, and the machine learning life cycle. Try these analogies and examples to cut through the jargon.…

-

Natural Language Processing is the New Revolution in Healthcare AI After a late start, Artificial Intelligence (AI) has made massive inroads in penetrating the pharmaceutical and healthcare industries. Nearly all aspects of the industry have felt impacts from…

-

AI: The Game Changer in Online Businesses AI technology is unquestionably changing the future of business. A growing number of companies are revamping their online business models to deal with new AI tools. Therefore, it should not be surprising to hear that…

-

A Closer Look at Generative AI Artificial intelligence is already designing microchips and sending us spam, so what's next? Here's how generative AI really works and what to expect now that it's here. Generative AI is an umbrella term for any kind of…

-

Exploring the Dangers of Chatbots AI language models are the shiniest, most exciting thing in tech right now. But they’re poised to create a major new problem: they are ridiculously easy to misuse and to deploy as powerful phishing or scamming tools. No…

-

The difference between structured and unstructured data Structured data and unstructured data are both forms of data, but the first uses a single standardized format for storage, and the second does not. Structured data must be appropriately formatted (or…

-

The increasing use of AI-driven chatbots for customer service in Ecommerce AI-powered chatbots have revolutionized the way Ecommerce businesses handle customer service. With the ability to provide immediate responses and resolutions, chatbots ensure that…

-

The Advantages of Python for Businesses Enterprises are making a foray into the digital realm to be a part of digitization. To gain a foothold in the digital industry, they need to choose a portable, scalable, flexible, and high-level programming language…

-

Should You Use an AI Writer? When it comes to writing, there are many different types of writers. Some write for pleasure, some write to inform, and some write to sell. But what happens when the work needs to be done, and there just isn’t enough time or…

-

Functions and applications of generative AI models Learn how industries use generative AI models, which function on their own to create new content and alongside discriminative models to identify, for example, 'real' vs. 'fake.' AI encompasses many techniques…

-

The role and the value of the machine learning engineer What is a machine learning engineer? An ML engineer is a professional in the field of information technology who specializes in developing self-contained artificial intelligence (AI) systems that…

-

How conversational AI tools can contribute to business performance Conversational AI tools have traditionally been limited in scope, but as they become more humanlike, businesses have realized their potential and applied them to more use cases. As is the case…

-

MLOps in a Nutshell Data is becoming more complex, and so are the approaches designed to process it. Companies have access to more data than ever, but many still struggle to glean the full potential of insights from what they have. Machine learning has…

-

The Future of AI: Setting the Priorities Leaders should focus on these three priorities to ensure their AI initiatives provide business value and do so ethically. An artificial intelligence (AI) algorithm designed to scan electronic medical records for…

-

The future of AI and the key role of human interaction Artificial intelligence technology is evolving at a faster pace than ever, largely due to human-powered data. Artificial Intelligence (AI) has significantly altered how work is done. However, AI even has a…

-

Is the first autonomous aircraft with an AI pilot in the making? A team of researchers at Carnegie Mellon University believe they have developed the first AI pilot that enables autonomous aircraft to navigate crowded airspace. AI pilot could eventually pass…

-

How Data Science is Changing the Entertainment Industry Beyond how much and when, to what we think and how we feel Like countless other industries, the entertainment industry is being transformed by data. There’s no doubt data has always played a role in…

-

Data wrangling in SQL: 5 recommended methods Data wrangling is an essential job function for data engineering, data science, or machine learning roles. As knowledgeable coders, many of these professionals can rely on their programming skills and help from…

-

The Bright Future of SQL Machine learning, big data analytics or AI may steal the headlines, but if you want to hone a smart, strategic skill that can elevate your career, look no further than SQL. Data engineering and data science are fast-moving,…

-

Green AI: how AI poses both problems and solutions regarding climate change AI and ML are making a significant contribution to climate change. Developers can help reverse the trend with best practices and tools to measure carbon efficiency. The growth of…

-

The 6 abilities of the perfect data scientist There are currently over 400K job openings for data scientists on LinkedIn in the U.S. alone. And, every single one of these companies wants to hire that magical unicorn of a data scientist that can do it all.…

-

The Language of Data Science: Python vs R Python may be the second choice to R, but its popularity and ease of use position it to dominate data science. “When [Netflix’s data science team] started, there was one single kind of data scientist,” says Christine…

-

The Essence of Data Annotation in Machine Learning Data annotation in machine learning is a term used to describe the process of labeling data in a way that machines can understand, either through computer vision or natural language processing (NLP). Another…

-

Why Robots Aren't Here to Replace Humans, but to Complement Them You’ve heard the saying “if you do what you love, you’ll never work a day in your life,” right? Well, I hate to say it, but that’s me. I never dreamed that I would wind up in a field that…

-

How Artificial Intelligence could drive the Electric Vehicle market Artificial intelligence (AI) is rapidly evolving and becoming ubiquitous across virtually every industry. AI solutions allow organizations to achieve operational efficiencies, gain insights…

-

The Impact of Predictive Analytics on Developments in Mobile Phone Tracking Predictive analytics technology continues to shape the world in surprising new ways. One of the trends that few people have talked about is the role of predictive analytics in the…

-

Understanding Natural Language Processing Terms This post provides a concise overview of 18 natural language processing terms, intended as an entry point for the beginner looking for some orientation on the topic. At the intersection of computational…

-

Q&A: How does AI technology affect climate change? With more companies building supercomputers and infrastructure that requires a lot of compute power, AI may be doing more harm than good to the planet. Severe wildfires, raging storms and other extreme…

-

AI in civil engineering: fundamentals, applications and developments Artificial intelligence has always been a far-reaching manifold technology with limitless potential across industries. AI in civil engineering took center stage a long time ago with the…

-

The Growing Influence of Ethical AI in Data Science Industries such as insurance that handle personal information are paying more attention to customers’ desire for responsible, transparent AI. AI (artificial intelligence) is a tremendous asset to companies…

-

How do automation and robotics differ? We live in a tech-driven world, but with all the technologies and innovative solutions at our disposal, it can be difficult to figure out what tech solutions are right for your business. Oftentimes, business leaders who…

-

Key differences between Business Intelligence and Data Science Cloud computing and other technological advances have made organizations focus more on the future rather than on analyzing reports of past data. To gain a competitive business advantage, companies…

-

The role of machine learning in making homes smart Smart home automation has become quite popular in recent years, moving from a luxury for the rich to a staple in many homes. The most popular smart home devices are speakers and thermostats, but a growing…

-

Machine learning in the agricultural sector Food is a basic need of human beings that is now satisfied through farming. Machine learning in agriculture can optimize the way food gets to our table and revolutionize one of the most critical sectors of the…

-

Edge computing in a Nutshell Edge computing (EC) allows data generated by the Internet of Things (IoT) to be processed near its source, rather than sending the data great distances to data centers or a cloud. More specifically, edge computing uses a network…

-

The biggest challenges for AI in finance Education, explainability, privacy and integration are some of the problems institutions face when implementing machine learning tools and technology. From credit cards to title insurance to loans and even fraud and…

-

How AI reinforces stereotypes through biased data Artificial intelligence (AI) software systems have been under attack for years for systematizing bias against minorities. Banks using AI algorithms for mortgage approvals are systematically rejecting minority…

-

Building Your Data Structure: FAIR Data Obtaining access to the right data is a first, essential step in any Data Science endeavour. But what makes the data “right”? The difference in datasets Every dataset can be different, not only in terms of content, but…

-

Do We Need Decision Scientists? Twenty years ago, there was a great reckoning in the market research industry. After spending decades mastering the collection and analysis of survey data, the banality of research-backed statements like “consumers don’t like…

-

Data labeling: the key to AI success In this article, Carlos Melendez, COO, Wovenware, discusses best practices for “The Third Mile in AI Development” – the huge market subsector in data labeling companies, as they continue to come up with new ways to…

-

Should we care more about ethics in a data science environment? The big idea Undergraduate training for data scientists - dubbed the sexiest job of the 21st century by Harvard Business Review - falls short in preparing students for the ethical use of data…

-

The Short-Term Future of Natural Language Processing Natural language processing research and applications are moving forward rapidly. Several trends have emerged from this progress and point to a future of more exciting possibilities and interesting…

-

How people from different backgrounds are entering the data science field Data science careers used to be extremely selective and only those with certain types of traditional credentials were ever considered. While some might suggest that this discouraged…

-

Trendsetting Applications of AI in Healthcare We live in a digital age, so it’s not surprising that the healthcare industry follows suit. From the data-driven insights of wearables to mobile apps that help manage chronic conditions, it’s clear that technology…

-

The benefits of AI in CRM AI has the power to liberate organizations from CRM-related manual processes and improve customer engagement, sales insights, and social networking, for starters. Customer relationship management systems have become indispensable…

-

Deepfake Neural Networks: What are GANs? Generative adversarial networks (GANs) are one of the newer machine learning algorithms that data scientists are tapping into. When I first heard of them, I wondered how networks could be adversarial. I envisioned networks…

-

Using Artificial Intelligence to see future virus threats coming Researchers use machine learning algorithms in novel approach to finding future zoonotic virus threats. Most of the emerging infectious diseases that threaten humans – including coronaviruses –…

-

Predictive Analytics: Maximizing visitors while preserving nature? Recent years have shown that more and more people visit the Veluwe. The region is well known for its diversity: you can go for a peaceful hike, cultural activity or bike tour. The leisure…

-

Moving Towards Data Science: Hiring Your First Data Scientist In October 2020 I joined accuRx as the company’s first data scientist. At the time of joining, accuRx was a team of 60-odd employees who had done an incredible job relying on intuition and a…

-

Zooming In On The Data Science Pipeline Finding the right data science tools is paramount if your team is to discover business insight. Here are five things to look for when you search for your next data science platform. If you are old enough to have grown…

-

How algorithms mislead the human brain in social media - Part 2 If you haven't read part 1 of this article yet, be sure to check it out here. Echo Chambers Most of us do not believe we follow the herd. But our confirmation bias leads us to follow others who…

-

How algorithms mislead the human brain in social media - Part 1 Consider Andy, who is worried about contracting COVID-19. Unable to read all the articles he sees on it, he relies on trusted friends for tips. When one opines on Facebook that pandemic fears are…

-

Predicting Student Success In The Digital Era I had the pleasure of moderating a webinar focusing on the work of two Pivotal data scientists working with a prestigious Midwestern university to use data to predict student success. It’s a topic that has long…

-

Essential Data Science Tools And Frameworks The fields of data science and artificial intelligence see constant growth. As more companies and industries find value in automation, analytics, and insight discovery, there comes a need for the development of new…

-

Getting Your Machine Learning Model To Production: Why Does It Take So Long? A Gentle Guide to the complexities of model deployment, and integrating with the enterprise application and data pipeline. What the Data Scientist, Data Engineer, ML Engineer, and ML…

-

How processing speed defines who is successful in data science For close to two decades, companies like Facebook, Amazon, JP Morgan and Uber have been writing the book on how to successfully use data science to grow their businesses. Thanks to these…

-

How Machine Learning is Taking Over Wall Street Well-funded financial institutions are in a perpetual tech arms race, so it’s no surprise that machine learning is shaking up the industry. Investment banking, hedge funds, and similar entities are employing the…

-

Dataiku named Data Science Partner of the Year by Snowflake Outstanding performance in technical integrations, technical skills, collaboration options and sales traction Dataiku has, at Snowflake's virtual partner summit, the…

-

Becoming a better data scientist by improving your SQL skills Learning advanced SQL skills can help data scientists effectively query their databases and unlock new insights into data relationships, resulting in more useful information. The skills people most…

-

Running a tech company? Use data science and AI to improve There are many great benefits of artificial intelligence for startups that can't be overlooked. As a tech company, you will always be looking for ways to develop. Using data science and…

-

Finding your way in programming: top 10 languages summarized The landscape of programming languages is rich and expanding, which can make it tricky to focus on just one or another for your career. We highlight some of the most popular languages that are…

-

Different Roles in Data Science In this article, we will have a look at five distinct data careers, and hopefully provide some advice on how to get one's feet wet in this convoluted field. The data-related career landscape can be confusing, not only to…

-

DataRobot active in World Economic Forum AI initiative For fairness, accountability and transparency of Artificial Intelligence DataRobot, a fast-growing provider of enterprise AI, has joined a new initiative of the World…

-

How the skillset of data scientists will change over the next decade AutoML is poised to turn developers into data scientists — and vice versa. Here’s how AutoML will radically change data science for the better. In the coming decade, the data scientist role…

-

Reducing CO2 with the help of Data Science Optimize operations by shifting loads in time and space Data scientists have much to contribute to climate change solutions. Even if your full-time job isn’t working on climate, data scientists who work on a company’s…

-

How valuable is your data science project really? An evaluation guide Performance metrics can’t tell you what you want to know: how valuable a project actually is There is a big focus in data science on various performance metrics. Data scientists will spend…

-

Less is More: Confusion in AI Describing Terms An overview of the competing definitions for addressing AI’s challenges There are a lot of people out there working to make artificial intelligence and machine learning suck less. In fact, earlier this year I…

-

Context & Uncertainty in Web Analytics Trying to make decisions with data “If a measurement matters at all, it is because it must have some conceivable effect on decisions and behaviour. If we can’t identify a decision that could be affected by a proposed…

-

Moving your projects to the cloud, but why? Understanding the cloud's main advantages and disadvantages In this article, we are going to change the context slightly. In previous articles, we have been talking about data management, the importance of data…

-

Preserving privacy within a population: differential privacy In this article, I will present the definition of differential privacy and how to preserve the privacy and personal data of users while using their data in training machine learning models or driving…
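A mechanism is ε-differentially private if adding or removing any single person's record changes the probability of any output by at most a factor of e^ε. The standard Laplace mechanism is one common way to meet this definition. The sketch below shows it for a counting query and is not necessarily the approach the article takes. The function names `laplace_noise` and `private_count` are hypothetical.

```python
import random


def laplace_noise(scale, rng=random):
    # The difference of two i.i.d. exponential variables with mean `scale`
    # follows a Laplace(0, scale) distribution.
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon, rng=random):
    """Epsilon-differentially private count using the Laplace mechanism.

    A counting query has sensitivity 1: adding or removing one person's
    record changes the true count by at most 1, so Laplace noise with
    scale 1/epsilon gives epsilon-differential privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)


if __name__ == "__main__":
    ages = [23, 67, 45, 71, 34, 80, 29]  # hypothetical example data
    rng = random.Random(42)
    print(private_count(ages, lambda a: a >= 65, epsilon=0.5, rng=rng))
```

A smaller epsilon means more noise and stronger privacy. Each released answer also consumes part of the overall privacy budget.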

-

Determining the feature set complexity Thoughtful predictor selection is essential for model fairness One common AI-related fear I’ve often heard is that machine learning models will leverage oddball facts buried in vast databases of personal information to…

-

The requirements of a good big data architect To be an excellent big data architect, it is essential to first be a good data architect; the two roles are different. Let's take a look! Big data that is both structured and non-structured. While it…

-

7 trends that will emerge in the 2021 big data industry “The best-laid plans of mice and men often go amiss” – a saying by poet Robert Burns. In January 2020, most businesses laid out ambitious plans, covering a complete roadmap to steer organizations through…

-

The increasing role of AI in regulation How the Biden administration will change the AI playing field, and what you should be doing now. With President Biden having made some important appointments recently, there’s a lot of speculation about what we can…

-

Big Tech: the battle for our data The most important sector of tech is user privacy, and with it comes a war fought not in the skies or trenches but in congressional hearings and slanderous advertisements: a battle fought in the shadows for your data and…

-

Reusing data for ML? Hash your data before you create the train-test split The best way to make sure the training and test sets are never mixed while updating the data set. Recently, I was reading Aurélien Géron’s Hands-On Machine Learning with Scikit-Learn,…
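The idea is to assign each row to the training or test set using a hash of a stable, unique identifier. A row then keeps its assignment when new rows are appended to the data set, so rows never drift between the two sets. Below is a minimal sketch of that idea, assuming every row carries such an identifier. The CRC32 checksum and the names `is_in_test_set` and `split_by_id` are illustrative choices, not taken from the article.

```python
import zlib


def is_in_test_set(identifier, test_ratio):
    """Place a row in the test set based on a hash of its stable identifier.

    The same identifier always yields the same 32-bit checksum, so a row's
    train/test assignment never changes when the data set grows.
    """
    checksum = zlib.crc32(str(identifier).encode("utf-8")) & 0xFFFFFFFF
    return checksum < test_ratio * 2**32


def split_by_id(rows, id_key, test_ratio=0.2):
    """Split a list of dict rows into (train, test) using hashed identifiers."""
    train, test = [], []
    for row in rows:
        (test if is_in_test_set(row[id_key], test_ratio) else train).append(row)
    return train, test


if __name__ == "__main__":
    data = [{"id": i, "value": i * 10} for i in range(1000)]
    train, test = split_by_id(data, "id")
    print(len(train), len(test))  # roughly an 80/20 split
```

An identifier derived from mutable columns would defeat the purpose. Row indices also only work if new rows are always appended and no rows are ever deleted.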

-

Augmented analytics: when AI improves data analytics Augmented analytics: the combination of AI and analytics is the latest innovation in data analytics. For organizations, data analysis has evolved from hiring “unicorn” data scientists – to having smart…

-

How Big Data leaves its mark on the banking industry Did you know that big data can impact your bank account, and in more ways than one? Here's what to know about the role big data is playing in finance and within your local bank. Nowadays, terms like ‘Data…

-

The uses of artificial intelligence when managing legal contracts Legal contracts constitute the foundation upon which the dynamic processes that empower organizations and their interactions with each other transpire in the world of commerce. Contracts move…

-

A word of advice to help you get your first data science job Creativity, grit, and perseverance will become the three words you live by Whether you’re a new graduate, someone looking for a career change, or a cat similar to the one above, the data science…

-

The right tools for citizen data scientists When Gartner talks about expanding data analytics to business users, they also talk about how the analytical tools must be suitable for business users. If a business wants to implement a citizen data scientist…

-

How data can aid young homeless people What comes to mind when you think of a “homeless person”? Chances are, you’ll picture an adult, probably male, dirty, likely with some health conditions, including a mental illness. Few of us would immediately recall…

-

9 Data issues to deal with in order to optimize AI projects The quality of your data affects how well your AI and machine learning models will operate. Getting ahead of these nine data issues will poise organizations for successful AI models. At the core of…

-

Applying data science to battle childhood cancer Acute myeloid leukaemia in children has a poor prognosis and treatment options unchanged for decades. One collaboration is using data analytics to bring a fresh approach to tackling the disease. Acute myeloid…

-

How to use data science to get the most useful insights out of your data Big data has been touted as the answer to many of the questions and problems businesses have encountered for years. Granular touch-points should simplify making predictions, solving…

-

The economic data behind major sports contracts Professional sports lend themselves really well to economic calculations: players, coaches, and agents act similarly to the hypothetical, rational decision-makers in economic models. While this data may seem…

-

BERT-SQuAD: Interviewing AI about AI If you’re looking for a data science job, you’ve probably noticed that the field is hyper-competitive. AI can now even generate code in any language. Below, we’ll explore how AI can extract information from paragraphs to…

-

The differences in AI applications at the different phases of business growth We see companies applying AI solutions differently, depending on their growth stage. Here are the challenges they face and the best practices at each stage. A growing number of…

-

3 AI and data science applications that can help deal with COVID-19 All industries already feel the impact of the current COVID-19 pandemic on the economy. As many businesses had to shut down and either switch to telework or let go of their entire staff,…

-

What is edge intelligence and how to apply it? The term “edge intelligence,” also referred to as “intelligence on the edge,” describes a new phase in edge computing. Organizations are using edge intelligence to develop smarter factory floors, retail…

-

Data science plays key role in COVID-19 research through supercomputers Supercomputers, AI and high-end analytic tools are each playing a key role in the race to find answers, treatments and a cure for the widespread COVID-19. In the race to flatten the curve…

-

Data science community to battle COVID-19 via Kaggle A challenge on the data science community site Kaggle is asking great minds to apply machine learning to battle the COVID-19 coronavirus pandemic. As COVID-19 continues to spread uncontrolled around the…

-

Why communication on algorithms matters The models you create have real-world applications that affect how your colleagues do their jobs. That means they need to understand what you’ve created, how it works, and what its limitations are. They can’t do any of…

-

How to prevent and deal with big data breaches On average, every data breach affects about 25,000 records and costs the affected organization almost $4 million. This cost comes in the form of brand damage, loss of customer trust, and regulatory fines. As data…

-

Three variants of machine learning fortifying AI deployments Although machine learning is an integral component of AI (Artificial Intelligence), it’s critical to realize that it’s just one of the many dimensions of this collection of technologies. Expressions…

-

9 Tips to become a better data scientist Over the years I have worked on many Data Science projects. I remember how easy it was to get lost and waste a lot of energy in the wrong direction. In time, I learned what works for me to be more effective. This list is my…

-

Women in data science: what developments do we see? As you look around in our society today, one thing is clear: technology is king and data science and analytics are the building blocks of the majority of modern industry. And with that comes all sorts of…

-

Keeping your data safe in an era of cloud computing These cloud security practices for 2020 are absolutely essential to keep your data safe and secure in this new decade. In recent years, cloud computing has gained increasing popularity and proved its…

-

How to use AI image recognition responsibly? The use of artificial intelligence (AI) for image recognition offers great potential for business transformation and problem-solving. But numerous responsibilities are interwoven with that potential. Predominant…

-

What to expect from the data decade 2020-2030? From wild speculation that flying cars will become the norm to robots that will be able to tend to our every need, there is lots of buzz about how AI, Machine Learning, and Deep Learning will change our lives.…

-

Why machine learning has a major impact on all industries Machine learning is having a major impact on the global marketplace. It will have a profound effect on companies of all sizes over the next few years. Artificial Intelligence is surrounding us…

-

How autonomous vehicles are driven by data Understanding how to capture, process, activate, and store the staggering amount of data each vehicle is generating is central to realizing the future of autonomous vehicles (AVs). Autonomous vehicles have long been…

-

5 Astonishing IoT examples in civil engineering The internet of things is making a major impact on the field of civil engineering, and these five examples of IoT applications in civil engineering are fascinating. As the Internet of Things (IoT) becomes…

-

Top artificial intelligence trends for 2020 Top AI trends for 2020 are increased automation to extend traditional RPA, deeper explainable AI with more natural language capacity, and better chips for AI on the edge. The AI trends 2020 landscape will be…

-

How automated data analytics can improve performance Data, data, data. Something very valuable to brands. They need it in order to make informed decisions and in the long term, make their brand grow. That part is probably common knowledge, right? What you are…

-

Talend: A personal data love story When I was in my mid-twenties, I thought I had it all. I had just recently graduated from a top law school, passed the California Bar Exam, and was working as a junior associate at a prestigious San Francisco law firm. Three…

-

Why the right data input is key: A Machine Learning example Finding the ‘sweet spot’ of data needs and consumption is critical to a business. Without enough, the business model underperforms. Too much and you run the risk of compromised security and…

-

Connection between human and artificial intelligence moving closer to realization What was once the stuff of science fiction is now science fact, and that’s a good thing. It is heartening to hear how personal augmentation with robotics is changing people’s…

-

The partnership between IT and Data Science Data science and IT no longer are separate disciplines. Think of it as a partnership. The data science world in its most puristic state is populated by parallel processing servers that primarily run Hadoop and…

-

Machines shape the future of customer experiences Global research by Futurum Research, commissioned by SAS, shows that 67% of all interactions between customers and businesses will be handled by smart machines in 2030. The research shows that…

-

Preventing fraud by using AI technology As fraudsters become increasingly more professional and technologically advanced, financial organizations need to rely on products that use artificial intelligence (AI) to prevent fraud. Identity verification…

-

Machine learning: definition and opportunities What is machine learning? Machine learning is an application of artificial intelligence (AI) that gives computers the ability to continually learn from data, identify patterns, make decisions and improve from…

-

Four important drivers of data science developments According to the Gartner Group, digital business reached a tipping point last year, with 49% of CIOs reporting that their enterprises have already changed their business models or are in the process of doing…

-

Is AI a threat or an opportunity to data engineers? Humans losing jobs to robots has been the preoccupation of economists and sci-fi writers alike for almost 100 years. AI systems are the next perceived threat to human jobs, but which jobs? Sourcing the logic…

-

What is the impact of AI on cybersecurity? In today's technology-driven world we are becoming increasingly dependent on various technological tools to help us finish everyday tasks much faster or even do them for us, artificial intelligence being the most…

-

How serverless machine learning and choosing the right FaaS benefit AI development Getting started with machine learning throws multiple hurdles at enterprises. But the serverless computing trend, when applied to machine learning, can help remove some…

-

The role of Machine Learning in the development of text to speech technology Machine learning is drastically advancing the development of text to speech technology. Here's how, and why it's so important. Machine learning has played a very important role in…

-

Topic modeling and the need for humanity in data science In fields involved with knowledge production, unsupervised machine-learning algorithms are becoming the standard. These algorithms allow us to statistically analyze data sets that exceed traditional…

-

Ethical insights on the future of Generation AI Millions of our youngest people, children under 10 years old, are exposed to misleading and biased uses of artificial intelligence. What will you do about it? We are a digital society in transition: children…

-

7 Personality assets required to be successful in data and tech If you look at many of the best-known visionaries, such as Richard Branson, Elon Musk, and Steve Jobs, there are certain traits that they all have which are necessary for being successful. So…

-

Technology advancements: a blessing and a curse for cybersecurity With the ever-growing impact of big data, hackers have access to more and more terrifying options. Here's what we can do about it. Big data is the lynchpin of new advances in cybersecurity.…

-

The persuasive power of data and the importance of data integrity Data is like statistics: a matter of interpretation. The process may look scientific, but that does not mean the result is credible or reliable. How can we trust what a person says if we deny…

-

SAS: 4 real-world artificial intelligence applications Everyone is talking about AI (artificial intelligence). Unfortunately, a lot of what you hear about AI in movies and on the TV is sensationalized for entertainment. Indeed, AI is overhyped. But AI is also…

-

How AI is influencing web design Artificial intelligence in web design is making a major impact. This is what to know about how it works and how effective it can be. When Alan Turing invented the first intelligent machine, few could have predicted that the…

-

What is Machine Learning? And what are the best practices? As machine learning continues to address common use cases, it is crucial to consider what it takes to operationalize your data into a practical, maintainable solution. This is particularly important…

-

Staying on the right track in the era of big data Volume dominates the multidimensional big data world. The challenge many organizations today are facing is harnessing the potential of the data and applying all of the usual methods and technologies at scale.…

-

Why human-guided machine learning is the way to go Every data consumer wants to believe that the data they are using to make decisions is complete and up-to-date. However, this is rarely the case. Customers change their address, products experience revisions,…

-

The impact of 5G on developments in modern technology From foldable 5G phones to operations performed on a patient kilometers away, and from the early rollout of super-fast networks in the US to the tactile internet and the Internet of Things, and…

-

This year's top 10 data events No matter what industry you're in, if you're not investigating ways to use big data and AI to boost your business, you're missing out on a huge opportunity. Whether you're a seasoned pro or a beginner in the field, check out our…

-

Why we should be aware of AI bias in lending It seems that, beyond all the hype, AI (artificial intelligence) applications in lending do speed up and automate decision-making. Indeed, a couple of months ago Upstart, an AI-leveraging fintech startup, announced…

-

What are smart cities and what to expect from them? At B2B International, the latest innovations and emerging ‘megatrends’ shaping industries and markets are an important topic. So, for every month in 2019 they decided to delve a little deeper into each of…

-

Access to RPA bots in Azure thanks to Automation Anywhere Automation Anywhere has made it possible to access its Robotic Process Automation (RPA) bots from Azure. The company states that an extensive partnership has been set up with…

-

The challenge of bringing structure to unstructured data The world is collecting more and more data, at an alarmingly increasing rate. From the dawn of civilization until around 2003, humanity produced some 5 exabytes of data. Now…

-

Pattern matching: The fuel that makes AI work Much of the power of machine learning rests in its ability to detect patterns. Much of the basis of this power is the ability of machine learning algorithms to be trained on example data such that, when future…

-

Data as a universal language You don’t have to look very far to recognize the importance of data analytics in our world; from the weather channel using historical weather patterns to predict the summer, to a professional baseball team using on-base plus…

-

Microsoft takes next cybersecurity step Microsoft just announced they are dropping the password-expiration policies that require periodic password changes in Windows 10 version 1903 and Windows Server version 1903. Microsoft explains in detail this new change…

-

The 8 most important industrial IoT developments in 2019 From manufacturing to the retail sector, the infinite applications of the industrial internet of things (IIoT) are disrupting business processes, thereby improving operational efficiency and business…

-

Chatbots, big data and the future of customer service The rise and development of big data has paved the way for an incredible array of chatbots in customer service. Here's what to know. Big data is changing the direction of customer service. Machine learning…

-

Why caution is recommended when using analytics for censorship Historically, concerns about over-zealous censorship have focused on repressive governments. In the United States (and many other countries), free speech has been a pillar of society since its…

-

How artificial intelligence will shape the future of business From the boardroom at the office to your living room at home, artificial intelligence (AI) is nearly everywhere nowadays. Tipped as the most disruptive technology of all time, it has already…

-

The ability to speed up the training for deep learning networks used for AI through chunking At the International Conference on Learning Representations on May 6, IBM Research shared a look at how chunk-based accumulation can speed the training for deep…

-

The data management issue in the development of the self-driving car Self-driving cars and trucks once seemed like a staple of science fiction which could never morph into a reality here in the real world. Nevertheless, the past few years have given rise to a…

-

The massive impact of data science on the web development business “A billion hours ago, modern Homo sapiens emerged. A billion minutes ago, Christianity began. A billion seconds ago, the IBM personal computer was released. A billion Google searches ago… was…

-

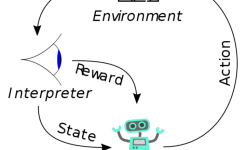

A brief look into Reinforcement Learning Reinforcement Learning (RL) is a very interesting topic within Artificial Intelligence, and the concept is quite fascinating. In this post I will try to give a nice initial picture for those who want to know more about…

-

Intelligence, automation, or intelligent automation? There is a lot of excitement about artificial intelligence (AI), and also a lot of fear. Let’s set aside the potential for robots to take over the world for the moment and focus on more realistic fears.…

-

Multi-factor authentication and the importance of big data Big data is making a very big impact on multi-factor authentication solutions. Here's how and why this is so important to consider. Big data is already playing an essential role in authentication, and…

-

Why data is key in driving Robotic Process Automation Enterprise technology innovation and investments are typically driven by compelling events in an organization, especially in the areas of computing and machinery, as companies seek to do business faster…

-

How to create a trusted data environment in 3 essential steps We are in the era of the information economy. Nowadays, more than ever, companies have the capabilities to optimize their processes through the use of data and analytics. While there are endless…

-

Top 4 e-mail tracking tools using big data Big data is being incorporated in many aspects of e-mail marketing. It has made it surprisingly easy for organizations to track the performance of e-mail marketing campaigns in fascinating ways. How big data changes…

-

Recommending with SUCCES as a data scientist Have you ever walked an audience through your recommendations only to have them go nowhere? If you’re like most data scientists, chances are that you’ve been in this situation before. Part of the work of a data…

-

Big data and the future of the self-driving car Each year, car manufacturers get closer to successfully developing a fully autonomous vehicle. Over the last several years, major tech companies have paired up with car manufacturers to develop the advanced…

-

An overview of Morgan Stanley's surge toward data quality Jeff McMillan, chief analytics and data officer at Morgan Stanley, has long worried about the risks of relying solely on data. If the data put into an institution's system is inaccurate or out of date,…

-

Gaining control of big data with the help of NVMe Every day there is an unfathomable amount of data, nearly 2.5 quintillion bytes, being generated all around us. Part of the data being created we see every day, such as pictures and videos on our phones,…

-

Effective data analysis methods in 10 steps In this data-rich age, understanding how to analyze and extract true meaning from the digital insights available to our business is one of the primary drivers of success. Despite the colossal volume of data we…

-

Facing the major challenges that come with big data Worldwide, over 2.5 quintillion bytes of data are created every day. And with the expansion of the Internet of Things (IoT), that pace is increasing. 90% of the current data in the world was generated in the…

-

Machine learning, AI, and the increasing attention for data quality Data quality has been going through a renaissance recently. As a growing number of organizations increase efforts to transition computing infrastructure to the cloud and invest in…

-

Why trusting your data is key in optimizing analytics With the emergence of self-service business intelligence tools and platforms, data analysts and business users are now empowered to unearth timely data insights on their own and make impactful decisions…

-

E-commerce and the growing importance of data E-commerce is claiming a bigger role in global retail. In the US for example, e-commerce currently accounts for approximately 10% of all retail sales, a number that is projected to increase to nearly 18% by 2021.…

-

The amount of data that is created nowadays is incredible. The amount and importance of data are ever growing, and with that the need for analyzing and identifying patterns and trends in data becomes critical for businesses. Therefore, the need for big data…

-

Data centers have become a core component of modern living, containing and distributing the information required to participate in everything from social life to the economy. In 2017, data centers consumed 3 percent of the world’s electricity, and new…

-

It’s no secret that data scientists still have one of the best jobs in the world. With the amount of data growing exponentially, the profession shows no signs of slowing down. Data scientists have a base salary of $117,000 and high job satisfaction levels,…

-

RapidMiner, TIBCO Software, SAS and KNIME are among the leading providers of data science and machine learning products, according to the latest Gartner Magic Quadrant report. About this Magic Quadrant report Gartner Inc. has released its "Magic Quadrant for…

-

These days, not a day goes by without a news item or discussion about data in the media. Whether it concerns questions about privacy, the new opportunities and threats of Big Data, or new services based on smartly combining and…

-

The Centraal Bureau voor de Statistiek (CBS, Statistics Netherlands) will launch a Center for Big Data Statistics at the end of September. The aim is to develop new solutions based on big data technology. The new CBS expertise center will collaborate with various…

-

Anyone who has ever played Grand Theft Auto (GTA) knows the game was not made to keep you following the rules. Yet, according to researchers at the Technische Universität Darmstadt, GTA can help an artificial intelligence learn to … through traffic…

-

This afternoon, the Amsterdam ArenA opens its doors to the Netherlands' first so-called Big Data Hub. There, entrepreneurs can share data with governments and scientists and develop data-driven innovations in all kinds of areas: from entertainment to…

-

There’s growing interest in using big data for business localization now, although the use of customer data for optimal orientation of business locations and promotions has been around for at least a decade.…

-

Organizations with years of experience in deploying data warehouses and Business Intelligence are increasingly developing Data Science applications. That makes sense, because data has an impact on every organization: from retailers, travel agencies and…

-

Dell Services chief medical officer Dr. Nick van Terheyden explains the 'mind blowing' impact big data is having on the healthcare sector in both developing and developed countries. For a long time, doctors have been able to diagnose people with diabetes—one…

-

DeepMind, one of search giant Google's subsidiaries, which researches self-learning computers, is going to help with research into blindness. DeepMind will work with the British health organization NHS to teach its technology to … the first…

-

He is a man with a mission. And not a small one: together with patients, healthcare providers and insurers, he wants to bring about a turnaround in healthcare, shifting the focus from managing illness to managing health. Jeroen…

-

The Radboud Management Academy has now also launched its Business Data Scientist program, so successful in the Netherlands, in Belgium. In collaboration with Business & Decision, last week at the Axa office in Brussels a condensed program…

-

Most companies today claim to be fluent in data, but as with most trends, these claims tend to be exaggerated. Companies are high on data, but what does it mean to be a data-driven company? I went ahead and asked a number of business leaders. According to…

-

What if IoT were simply an umbrella term for ways of turning machine-generated data into something useful? For example, a bus tells my phone how far away my bus stop is, and my bike rental tells me how many bikes are available…

-

Interviewing job applicants is a waste of time. Anyone with enough historical data and the right computational models can precisely distill from a stack of CVs who is best suited for a given vacancy. What's more: if a…

-

Data and apps have nothing to do with each other. And everything. But, of course, in exactly the opposite way from what is usually assumed. Confused men. Nowhere do you encounter them as often as in IT. At least, that's what I think when I hear the opinions of intelligent people…

-

“Science has not yet mastered prophecy. We predict too much for the next year and yet far too little for the next ten.” These words, articulated by Neil Armstrong in a speech to a joint session of Congress in 1969, fit squarely into almost every decade since…

-

On March 7, the Data Science program starts in the northern Netherlands. To manage the ever-growing amount of data, IT Academy Noord-Nederland is training professionals from the North to become data scientists. With accredited courses from the Hanzehogeschool…

-

If we're not careful, things will go completely wrong again. Vendors often develop big data products as an extra step in the process, which only makes that process more complex, while products should in fact be making the process smoother. Fifteen…

-

Business leaders know they want to invest in big data, and they have high expectations on ROI, but do they really know what big data is? In Gartner’s hype cycle, the term ‘big data’ was once a staple of the yearly report. It moved swiftly into the peak of…

-

Organisations of all shapes and sizes across the world are drowning in big data; there’s no doubt about it. Yet, with hard drives, servers, file cabinets and storage facilities across the UK at capacity, the volume of information being collected will only…

-

Innovation organization TNO sees opportunities for big data and Internet of Things (IoT) technology to improve accessibility in the Amsterdam metropolitan region. “With big data, we can link multiple solutions together so that a city's infrastructure…

-

85% of companies store sensitive data in the cloud. This is a significant increase from the 54% who said they did so last year. 70% of companies are concerned about the security of this data. This is according to research by 451 Research…

-

The Internet of Things brings all kinds of security risks, but experienced experts who can help with them are scarce, says market researcher Gartner. According to Gartner, security technologies are needed for all Internet of Things devices…

-

Despite exponential increases in data storage in the cloud along with databases and the emerging Internet of Things (IoT), IT security executives remain worried about security breaches as well as vulnerabilities introduced via shared infrastructure. A cloud…

-

Big data is a phenomenon that is hard to define. Many will have heard of the 3 V’s: volume, velocity and variety. In short, big data is about large volumes, high speed (real time) and varied/unstructured data. Depending on…

-

Developments in information technology are moving fast, perhaps faster and faster. We hear and see more and more about business intelligence, self-service BI, artificial intelligence and machine learning. We see this reflected in employees who are increasingly…

-

More and more companies in the US are working with health insurers and parties that collect health data to find out which employees are at risk of getting sick, The Wall Street Journal reports. To keep healthcare costs in check…

-

Big data can create thousands of jobs and generate billions of euros in revenue. However, the opportunities big data offers cannot be seized as long as major concerns about privacy and security remain unresolved. This is reported by the Science…

-

The rise of robots and artificial intelligence is causing more and more jobs to disappear. We must prepare for mass unemployment now, professors warn. We are entering an era in which machines can … almost all human tasks…

-

Unexpected convergent consequences...this is what happens when eight different exponential technologies all explode onto the scene at once. This post (the second of seven) is a look at artificial intelligence. Future posts will look at other tech areas. An…

-

There won't be a 'big data revolution' until the public can be reassured that their data won't be misused. Big data is an asset which can create tens of thousands of jobs and generate hundreds of billions for the economy, but the opportunity can't be taken…

-

To really make the most of big data, most businesses need to invest in some tools or services - software, hardware, maybe even new staff - and there's no doubt that the costs can add up. The good news is that big data doesn't have to cost the Earth and a…

-

Companies are already actively using big data. They just don't call it that. While the phrase has problems, the technology is becoming more intrinsic to business. It turns out that no one knows what the heck big data is, and about the same number of companies…

-

Big Data has quickly become an established fact for Fortune 1000 firms — such is the conclusion of a Big Data executive survey that my firm has conducted for the past four years. The survey gathers perspectives from a small but influential group of executives…

-

Combining big data and the cloud is the perfect solution for a company's computing needs. A company's data often requires a computing environment which can quickly and effectively grow and flex, automatically accommodating large amounts of data. The cloud…

-

Much fanfare has surrounded the term “disruptive innovation” over the past few years. Professor Clayton M. Christensen has even re-entered the fold clarifying what he means when he uses the term. Despite the many differences in application, most people…